
1 December 2014

Mr. Martin Dolan

Chief Commissioner

Australian Transport Safety Bureau

62 Northbourne Avenue

Canberra ACT 2601

Dear Mr. Dolan,

I am pleased to provide you with the Transportation Safety Board of Canada's (TSB) report on the independent, objective peer review of the Australian Transport Safety Bureau (ATSB) investigation process and methodology.

The review was conducted in accordance with the agreed-upon terms of reference as specified in our July 2013 Memorandum of Understanding. This report constitutes the complete and final deliverable.

I thank you for the trust and confidence that the ATSB has placed in the TSB by asking us to conduct this review. We were pleased to be of assistance and to share our expertise. We also appreciated the opportunity to learn from the ATSB.

Sincerely,

Original signed by Kathleen Fox

Kathleen Fox

Chair

Executive summary

Introduction

The Australian Transport Safety Bureau (ATSB) conducted an investigation into the ditching of a Westwind 1124A near Norfolk Island, Australia, on 18 November 2009. Shortly after the final report (AO-2009-072) was released in August 2012, a television program questioned the quality and findings of the investigation. This prompted an Australian Senate committee to conduct an inquiry into the investigation, which yielded a number of unfavourable findings and recommendations for change.

At the ATSB's request, the Transportation Safety Board of Canada (TSB) agreed to conduct an independent, objective review (hereafter, the TSB Review) of the ATSB's investigation methodologies and processes. Under the terms of reference, the TSB would not reinvestigate the Norfolk Island occurrence, but would review how that investigation was conducted, and do the same for two other investigations of similar scope to provide a useful comparison. Consequently, the TSB Review examined these three occurrences:

- AO-2009-072 – Ditching – Israel Aircraft Westwind 1124A aircraft, VH-NGA, 5 km SW of Norfolk Island Airport, 18 November 2009 (Norfolk Island)Footnote 1

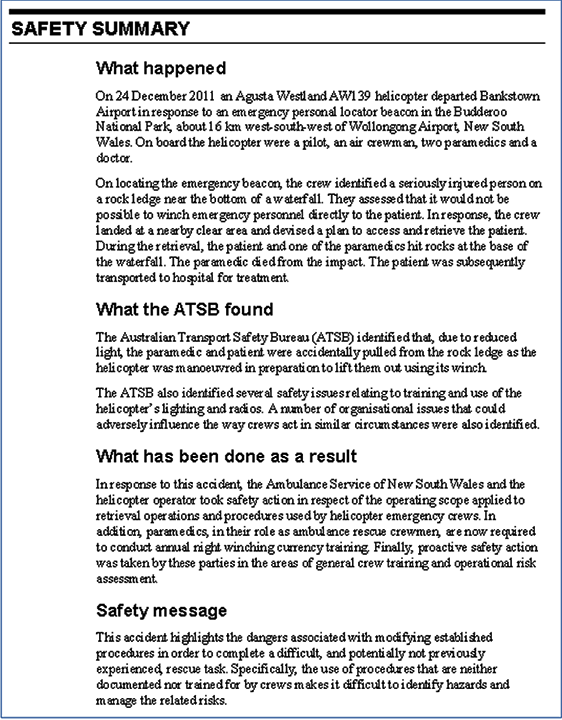

- AO-2011-166 – Helicopter winching accident involving an Agusta Westland AW139 helicopter, VH-SYZ, 16 km WSW of Wollongong Airport, NSW, 24 December 2011 (Kangaroo Valley)Footnote 2

- AO-2010-043 – Collision with terrain – Piper PA-31P-350, VH-PGW, 6 km NW of Bankstown Airport, NSW, 15 June 2010 (Canley Vale)Footnote 3

The review encompassed the full investigation timeline: the initial notification, the data collection phase, the analysis phase, and the production and release of the final report.

What we found

Comparison of ATSB and TSB methodologies

The organizations' methodologies have similar foundations, and focus on identifying the systemic deficiencies, individual actions, and local conditions that result in occurrences. Similarities in Australia and Canada's socio-economic cultures and legal traditions have resulted in similar enabling legislation, overall organizational structures, definitions, internal processes and robust methodologies that are linear, iterative and flexible.

The TSB Review compared the two organizations' methodologies against the standards and recommended practices outlined in Annex 13 to the International Civil Aviation Organization (ICAO) Convention on International Civil Aviation, and found that both met or exceeded the intent and spirit of those standards and practices.

The Norfolk Island investigation

The TSB Review of the Norfolk Island investigation revealed lapses in the application of the ATSB methodology with respect to the collection of factual information, and a lack of an iterative approach to analysis. The review also identified potential shortcomings in ATSB processes, whereby errors and flawed analysis stemming from the poor application of existing processes were not mitigated.

First of all, an early misunderstanding about the responsibilities of the Australian Civil Aviation Safety Authority (CASA) and the ATSB in the investigation was never resolved. This led to the ATSB collecting insufficient information from the operator, Pel-Air, to determine the extent to which the flight planning and monitoring deficiencies observed in the occurrence existed in the company in general.

Poor data collection also hampered the analysis of specific safety issues, particularly fuel management, company and regulatory oversight, and fatigue (the ATSB does not use a specific tool to guide investigation of human fatigue).

Weaknesses in the application of the ATSB analysis framework resulted in those data insufficiencies not being addressed and potential systemic oversight issues not being analyzed. Ineffective investigation oversight resulted in issues with data collection and analysis not being identified or resolved in a timely way.

All three peer reviews conducted on the Norfolk Island draft report identified issues with respect to factual information, analysis, and conclusions. Many of these concerns were never followed up after the review process was complete. The ATSB process does not include a second-level review to ensure that feedback from peer reviews is adequately addressed.

After investigation reports have gone through peer and management review, they are sent to directly involved parties (DIPs) for comment. In the Norfolk Island investigation, the DIP process was run twice: once on the initial draft, and again after the draft had been revised. However, there is no process to ensure that the ATSB communicates its response to DIPs' comments. Formal responses to DIPs increase their understanding of the action taken in response to their submissions, and may make them more amenable to accepting the final report.

In the Norfolk Island investigation, the Commission's review of the report took place immediately after the first DIP process was completed, 31 months after the occurrence. At this stage in an investigation, it is difficult to address issues of insufficient factual information since perishable information will not be available and the collection of other information could incur substantial delays. At the ATSB, the Commission does not formally review some reports until after the DIP process is complete, and in any event, there is no robustly documented process after the Commission review to ensure that its comments are addressed before the report is finalized. Both of these aspects of the ATSB review process increase the risk that deficiencies in the scope of the investigation and the quality of the report will not be addressed.

A safety issue was identified in this investigation concerning insufficient guidance being given to flight crews on obtaining timely weather forecasts en route to help them make decisions when weather conditions at destination were deteriorating. When the safety issue was presented to CASA, it was categorized as "critical", but in the final report it was described as "minor", which caused significant concern among stakeholders. The TSB Review observed that this shifted the focus of the discussion to the label and away from the issue itself—and the potential for its mitigation.

In the final stages of the investigation, senior managers were aware of the possibility that the report would generate some controversy, but communications staff were not consulted and no communications plan was developed. Once the investigation became the subject of an external inquiry, the ATSB could no longer comment publicly on the report, which hampered the Bureau's ability to defend its reputation.

The response to the Norfolk Island investigation report clearly demonstrated that it did not address key issues in the way the Australian aviation industry and members of the public expected.

The Kangaroo Valley and Canley Vale investigations

The review of the Kangaroo Valley and Canley Vale investigations showed that when the ATSB methodology is adhered to, and the component tools and processes to challenge and strengthen analysis are applied, the resulting analysis and findings are more defensible.

In contrast to Norfolk Island, the Kangaroo Valley and Canley Vale investigations underwent regular critical reviews and used the ATSB analysis tools effectively, which gave rise to well-documented decisions and to revised data collection plans and analyses. In the Canley Vale investigation, additional information collected as a direct result of a critical review informed decisions with respect to the investigation of regulatory oversight.

In the Kangaroo Valley investigation, the target timeline outlined in the ATSB Safety Investigation Quality System (SIQS) for a Level 3 investigation was exceeded, despite significant effort by the team to expedite the investigation. This may indicate that these targets are unrealistic, that the investigation was incorrectly classified, or that other work had influenced the published investigation schedules. Significant delays in completing an investigation increase the risk that stakeholders' expectations with respect to timeliness will not be met.

Nevertheless, because of the teams' active engagement with stakeholders in the Kangaroo Valley and Canley Vale investigations, expectations with respect to schedules were well managed and timely action was taken on safety issues.

In the Canley Vale investigation, events prior to the occurrence raised questions with respect to regulatory non-compliance and oversight. The report states that issues of regulatory non-compliance did not contribute to the occurrence, and the analysis tools were indeed effectively used to support this. However, the report could have benefitted from a more thorough discussion to clarify the underlying rationale for this conclusion.

Unlike Norfolk Island and Kangaroo Valley, the Canley Vale investigation included a closure briefing, which provided an opportunity to discuss lessons learned.

Recommendations

The TSB Review is making 14 recommendations to the ATSB in four main areas:

- Ensuring the consistent application of existing methodologies and processes

- Improving investigation methodologies and processes where they were found to have deficiencies

- Improving the oversight and governance of investigations

- Managing communications challenges more effectively.

Recommendation #1: Given that the ATSB investigation methodology and analysis tools represent best practice and have been shown to produce very good results, the ATSB should continue efforts to ensure the consistent application and use of its methodology and tools.

Recommendation #2: The ATSB should consider adding mechanisms to its review process to ensure there is a response to each comment made by a reviewer, and that there is a second-level review to verify that the response addresses the comment adequately.

Recommendation #3: The ATSB should augment its DIP process to ensure the Commission is satisfied that each comment has been adequately addressed, and that a response describing actions taken by the ATSB is provided to the person who submitted it.

Recommendation #4: The ATSB should review its risk assessment methodology and the use of risk labels to ensure that risks are appropriately described, and that the use of the labels is not diverting attention away from mitigating the unsafe conditions identified in the investigation.

Recommendation #5: The ATSB should review its investigation schedules for the completion of various levels of investigation to ensure that realistic timelines are communicated to stakeholders.

Recommendation #6: The ATSB should take steps to ensure that a systematic, iterative, team approach to analysis is used in all investigations.

Recommendation #7: The ATSB should provide investigators with a specific tool to assist with the collection and analysis of data in the area of sleep-related fatigue.

Recommendation #8: The ATSB should review the quality assurance measures adopted by the new team leaders and incorporate them in SIQS to ensure that their continued use is not dependent on the initiative of specific individuals.

Recommendation #9: The ATSB should modify the Commission report review process so that the Commission sees the report at a point in the investigation when deficiencies can be addressed, and the Commission's feedback is clearly communicated to staff and systematically addressed.

Recommendation #10: The ATSB should undertake a review of the structure, role, and responsibilities of its Commission with a view to ensuring clearer accountability for timely and effective oversight of the ATSB's investigations and reports.

Recommendation #11: The ATSB should adjust the critical investigation review procedures to ensure that the process for making and documenting decisions about investigation scope and direction is clearly communicated and consistently applied.

Recommendation #12: The ATSB should take steps to ensure closure briefings are conducted for all investigations.

Recommendation #13: The ATSB should provide clear guidance to all investigators that emphasizes both the independence of ATSB investigations, regardless of any regulatory investigations or audits being conducted at the same time, and the importance of collecting data related to regulatory oversight as a matter of course.

Recommendation #14: The ATSB should implement a process to ensure that communications staff identify any issues or controversy that might arise when a report is released, and develop a suitable communications plan to address them.

ATSB best practices to bring back to the TSB

The TSB Review identified a number of best practices and ways to potentially improve investigation operations at the TSB. An internal analysis will determine which practices could be adopted, and to what extent they could be incorporated into the TSB investigation methodology.

1.0 Introduction

1.1 Background

The Australian Transport Safety Bureau (ATSB) conducted an investigation into the ditching of a Westwind 1124A that occurred on 18 November 2009 near Norfolk Island, Australia. After the final ATSB investigation report (AO-2009-072) was released, a television program raised questions about the investigation. In the wake of this broadcast, an Australian Senate Committee conducted an inquiry, which made a number of unfavourable findings as well as several recommendations for change.

The ATSB approached the Chair of the Transportation Safety Board of Canada (TSB) and asked the TSB to conduct an independent review into ATSB investigation methodologies and processes (TSB Review). The TSB, which saw it as a mutual learning opportunity that could lead to improved investigation processes in both organizations, agreed to conduct the review.

The objectives of the TSB Review were twofold:

- Provide the ATSB with an independent, objective peer review of its investigation process/methodology and of the application of its methodology to selected occurrences (including the Norfolk Island occurrence).

- Identify best practices in both organizations that could be shared to improve existing processes and methodologies.

1.2 Terms of reference

The focus of the TSB Review was investigation process and methodology; the TSB was not to reinvestigate the Norfolk Island occurrence and would do its work independently of any other person or organization.

The terms of reference (TOR) set out five areas of inquiry for the TSB Review:

- The TSB was to conduct a comparative analysis of ATSB and TSB investigation methodologies, including the approach for risk assessment of safety issues, comparing them against the relevant provisions of Annex 13 to the Convention on International Civil Aviation. Strengths (best practices) and weaknesses (gaps) of each methodology were to be identified.

Next, the TSB was to review the Norfolk Island and other ATSB investigations to identify strengths and weaknesses in four areas:

- Application of the investigation methodology

- Management and governance of the investigation

- Investigation report process

- External communication.

The TOR established that the TSB was to be fully responsible for conducting the review and for analyzing the information collected, and to do so independently and objectively. The final report would present the independent views of the TSB.

1.3 Review methodology

The following tasks were completed as part of the TSB Review:

- Documentation review: The TSB reviewed the supporting documentation for both organizations' methodologies and processes so that it could compare them.

- Identification of ATSB investigations: The ATSB was asked to propose two investigations to the TSB Review that were similar in scope to the Norfolk Island investigation and could be usefully compared with it.

- Data and information collection: The TSB Review team collected data and information to clearly identify how the three investigations were done; sources included the following:

  - Interviews with ATSB personnel: Extensive interviews were conducted with the investigators in charge of the three investigations, as well as with other investigators involved in them and with ATSB managers and commissioners.

  - Workspace and investigation documentation review: The TSB was given access to the electronic workspaces for the three investigations, which allowed a thorough review of all available documentation.

- Analysis: The TSB Review team constructed a detailed sequence of events for each investigation, and compared the progress of the investigation with the processes and methods set out in ATSB documentation. Deviations from the described methodology were examined to identify the reasons for them or to identify potential best practices.

- Reporting: This report was prepared to present the findings of the TSB Review. Before it was published, the report was reviewed by a TSB steering committee established for this purpose, and by the ATSB for factual accuracy.

2.0 Comparison of ATSB and TSB methodologies

2.1 Analytical approach

This analysis compares and contrasts the overarching methodologies used by the ATSB and the TSB, including the methodology used to assess risk associated with safety issues; its objective is to identify potential weaknesses, strengths, and best practices.

The source documents for this analysis are each organization's published methodologies.

2.2 Overview of methodologies

The ATSB's and the TSB's methodologies have a similar theoretical foundation, and focus on identifying systemic deficiencies, individual actions, and local conditions that result in occurrences.

The Australian and the Canadian organizations operate in similar socio-economic cultures and legal traditions, giving rise to similar enabling legislation that prohibits assigning blame, provides privilege for on-board recordings and witness statements, and requires public reporting. There are some legislative differences, but these are outside the scope of this analysis.

These similarities and the nature of safety investigations result in the TSB and ATSB methodologies having similar definitions, internal processes, and overall structure. Both

- are generally very linear, following a path through input (occurrence), process (investigation), and output (safety communication);

- are iterative, with numerous loops back to earlier processes or repetitive applications of processes;

- allow for overlap of processes throughout an investigation; and

- use a standard, defined terminology.

Both organizations have detailed documentation of their respective methodology. The ATSB Safety Investigation Quality System (SIQS) is documented in a set of manualsFootnote 4 with a designated member of the investigation staff assigned to oversee and coordinate the administration of the system and its documentation. At the TSB, in contrast, responsibility for creating and maintaining the various documents (e.g., analysis methodology manuals and manuals of investigation) is shared among the modes and a multi-modal Standards and Training group.

The TSB has developed manuals for specific investigation topics, including the investigation and analysis of organizational and management influences, as well as the investigation of human fatigue, but the ATSB does not have such guidance for its investigators.

Both the ATSB and the TSB train investigators in their respective methodologies and their application. Training was outside the scope of this review.

The TSB and the ATSB have developed and implemented proprietary software applications based on their methodologies. The TSB Review found that investigators at both organizations use workarounds if the tools are cumbersome or difficult to use.

Both organizations aim to integrate their methodologies into day‑to‑day investigative work, which can be challenging. For example, at the TSB, the review of safety analysis differs among the modal Standards and Performance groups; modes differ in the degree to which safety analysis tools are used; and there is frequently no critical review of the safety analysis before the report is drafted. At the ATSB, some investigators in charge (IICs) work with their teams to review the analysis against evidence tables before the report is drafted, although the methodology does not require this. Then, when the peer reviewers and team leader review a report, the extent to which they consult the analysis tools varies.

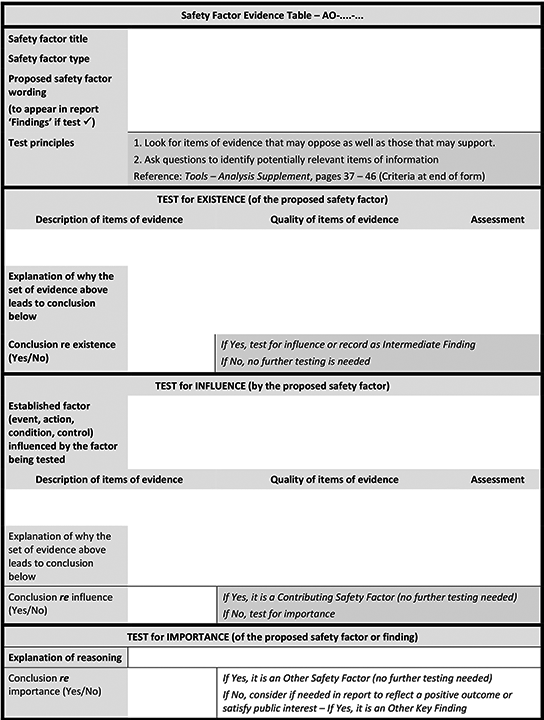

The ATSB and the TSB analysis software tools differ in that ATSB analysis makes use of embedded evidence tables to validate both the existence of safety factors and their influence on an occurrence. The evidence tables can also be used to assess the importance of safety factors that were not causal or contributory. In contrast, the TSB approach does not generally use evidence tables.Footnote 5

Both the ATSB and the TSB have exceeded target timelines in completing investigations and releasing reports.

2.3 Notification and assessment processes

Australia and Canada have legislative/regulatory requirements specifying the occurrences that must be reported. Similarly, both the ATSB and the TSB have staff assigned 24/7 to receive notifications and start assessment processes to determine the extent of response required. The organizations assess many of the same factors before making a decision whether to investigate.

The ATSB may rescind a decision to investigate if it determines that there is no safety value to be gained from continuing the investigation, provided it issues a statement setting out the reasons for discontinuing it. In contrast, the Canadian Transportation Accident Investigation and Safety Board Act requires that, once an investigation is undertaken, the TSB follow through to make findings and report publicly.

Both the ATSB and the TSB have several degrees of occurrence classification, and determine the level of investigation primarily in consideration of the potential to advance transportation safety. Other factors in the decision include the anticipated level of resources, the complexity of the investigation, the time required to complete it, the level of public interest, and the severity of the occurrence.

TSB classification levels have not been changed since 1994 and are currently under review. Reports made public (Classes 2, 3, and 4) always include findings. Reports with only factual information (Class 5) are provided to coroners where applicable, but are not publicly released. In contrast, the ATSB recently added two occurrence classification levels, one of which provides for a limited-scope investigation in which a report of factual information is published without analysis or findings.

2.4 Investigation/Data collection processes

The methodology review revealed many similarities and no significant differences in the organizations' investigation/data collection processes. For example, they

- do not commence on-site activities until it is safe for the investigation team to enter the site;

- have authority to control access to the site, and to compel persons or organizations to provide information;

- may collect data on anything that could affect safety;

- conduct data collection as an iterative process;

- collect the same types of data and have the same types of collection capabilities;

- have legislated provisions to prevent the unauthorized release of information and to prevent information from being used in courts of law or disciplinary proceedings.

2.5 Sequence of events analysis

2.5.1 Processes

The ATSB and TSB methodologies both involve the development and analysis of a sequence of occurrence events, and recognize that this work will be iterative and likely lead to collection of more information and additional analysis. In both cases, analysis starts from the sequence of occurrence events to look for higher-level deficiencies in risk controls or organizational influences. Both organizations have developed proprietary software tools to document the sequence of events and conduct the analysis.

Both methodologies include preliminary analysis, which guides the scope of the investigation and data collection. The document describing ATSB's preliminary analysis methodology is substantially more detailed than the TSB's, which is very high-level.

The ATSB makes routine use of evidence tables to validate both the existence of safety factors and their influence on an occurrence. The evidence tables can also be used to assess the importance of safety factors that were not causal or contributory. The TSB does not make regular use of evidence tables.

2.5.2 Risk assessment of safety issues

Once a systemic deficiency or safety factor has been identified, both methodologies use very similar processes to examine the risk it poses: they take into consideration existing risk controls or defences before defining the worst credible scenario (ATSB) or adverse consequence (TSB) (Table 1), and they determine the severity and likelihood of the consequence before rating the risk. The organizations differ, however, in the methods they apply to estimate the consequence component of risk.

| ATSB worst credible scenario | TSB adverse consequence |
|---|---|
| Catastrophic | Catastrophic |
| Major | Major |
| Moderate | Moderate |
| Minimal | Negligible |

The ATSB determines consequence level using two criteria:

- the severity of the occurrence (e.g., incident with no injuries versus accident involving loss of aircraft and multiple fatalities), and

- the type of transport operation involved.

According to the ATSB's guidelines, the inclusion of the type of operation "reflects the ATSB policy of prioritizing its investigation activities towards those issues that present a threat to public safety and are the subject of widespread public interest."Footnote 6 This prioritization is put into effect by applying a 4×4 matrix of type of operation against outcome to help determine which of the four possible consequence levels applies (Table 2).Footnote 7

| Type of operation | Minimal | Moderate | Major | Catastrophic |
|---|---|---|---|---|
| Air transport >5,700 kg (fare-paying passengers) | Minor incident only (e.g. birdstrike) | Incident | Accident; Serious incident; Incident with many minor injuries | Accident with multiple fatalities, or aircraft destroyed plus fatalities/serious injuries |
| Air transport >5,700 kg (freight); Air transport <5,700 kg (fare-paying passengers) | Incident | Accident; Serious incident; Incident with many minor injuries | Accident with multiple fatalities, or aircraft destroyed plus fatalities/serious injuries | N/A |
| Other commercial operations | Accident; Serious incident; Incident with many minor injuries | Fatal accident; Accident with aircraft destroyed or multiple serious injuries | N/A | N/A |
| Private operations | Accident with aircraft destroyed or multiple serious injuries | Fatal accident | N/A | N/A |

Consequently, occurrences with the same outcome, such as an accident with multiple fatalities, can be assigned different consequence levels depending on the size of the aircraft and the type of transport operation involved.
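The consequence step in Table 2 is essentially a two-key lookup: the type of operation selects a row and the outcome selects a column. The following Python sketch encodes the table literally, for illustration only. The operation keys, outcome labels, and the function name `consequence_level` are hypothetical and are not part of ATSB guidance or software; an outcome with no cell in a row (for example, an incident in other commercial operations) simply returns `None`, which is an assumption of this sketch rather than a statement of how the ATSB would rate it.

```python
from typing import Optional

# Consequence levels, in the column order of Table 2 (least to most severe).
LEVELS = ("Minimal", "Moderate", "Major", "Catastrophic")

# Outcome labels transcribed from the Table 2 cells (hypothetical identifiers).
MINOR_INCIDENT = "minor incident only"
INCIDENT = "incident"
ACCIDENT = "accident"
SERIOUS_INCIDENT = "serious incident"
MANY_MINOR_INJURIES = "incident with many minor injuries"
MULTIPLE_FATALITIES = (
    "accident with multiple fatalities, or aircraft destroyed plus fatalities/serious injuries"
)
FATAL = "fatal accident"
DESTROYED_OR_SERIOUS = "accident with aircraft destroyed or multiple serious injuries"

# One row per type of operation (hypothetical keys); each row holds one set of
# outcomes per consequence level. Empty sets stand for the "N/A" cells.
MATRIX = {
    # Air transport >5,700 kg (fare-paying passengers)
    "air_transport_heavy_passenger": [
        {MINOR_INCIDENT},
        {INCIDENT},
        {ACCIDENT, SERIOUS_INCIDENT, MANY_MINOR_INJURIES},
        {MULTIPLE_FATALITIES},
    ],
    # Air transport >5,700 kg (freight); air transport <5,700 kg (fare-paying passengers)
    "air_transport_heavy_freight_or_light_passenger": [
        {INCIDENT},
        {ACCIDENT, SERIOUS_INCIDENT, MANY_MINOR_INJURIES},
        {MULTIPLE_FATALITIES},
        set(),
    ],
    # Other commercial operations
    "other_commercial": [
        {ACCIDENT, SERIOUS_INCIDENT, MANY_MINOR_INJURIES},
        {FATAL, DESTROYED_OR_SERIOUS},
        set(),
        set(),
    ],
    # Private operations
    "private": [
        {DESTROYED_OR_SERIOUS},
        {FATAL},
        set(),
        set(),
    ],
}


def consequence_level(operation: str, outcome: str) -> Optional[str]:
    """Return the Table 2 consequence level for an operation type and outcome.

    Returns None when the outcome has no cell in that operation's row; raises
    KeyError for an operation key that is not in MATRIX.
    """
    for level, outcomes in zip(LEVELS, MATRIX[operation]):
        if outcome in outcomes:
            return level
    return None


if __name__ == "__main__":
    # Same outcome, two different types of operation.
    for op in ("air_transport_heavy_passenger", "air_transport_heavy_freight_or_light_passenger"):
        print(op, consequence_level(op, MULTIPLE_FATALITIES))
```

Run as a script, the sketch rates the same multiple-fatality outcome as Catastrophic for large passenger-carrying air transport but as Major for large freight or smaller passenger-carrying operations, mirroring the first two rows of Table 2.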

The TSB approach to determining severity of a consequence takes into account other factors in addition to type of operation and outcome; these include injury to other people or damage to property and the environment, as well as effects on commercial operations.

Another difference between the methodologies lies in the scale used to rate the likelihood of the worst credible scenario (ATSB) or adverse consequence (TSB): the ATSB uses a four‑level scale whereas the TSB uses a five‑level scale (Table 3).

| ATSB | TSB |
|---|---|
| Frequent | Frequent |
| | Probable |
| Occasional | Occasional |
| Rare | Unlikely |
| Very rare | Most improbable |

Once the risk assessment is complete, the two organizations tailor their safety communications according to risk level, with higher risk prompting higher-level communications.

2.5.3 Internal review of analysis

The ATSB methodology specifies that investigations must undergo peer, management, and Commission review before a final report is released.Footnote 8,Footnote 9 The peer review checklists require a review of all findings and the underlying evidence tables. Management review assesses the adequacy of each peer review process and the standard of the investigation report against ATSB quality objectives. However, the ATSB executive review and approval process is focused primarily on the report:

The Executive review process is essentially an approval process and detailed review of all elements of the investigation and supporting material should not be required. The Executive expects that once the Manager has recommended to the General Manager that the report is suitable for release; the Executive should only need to undertake a high level review of the report and its findings to satisfy itself of the completeness of the investigation, check standardisation aspects and consider broader safety and strategic implications associated with release of the report.Footnote 10

Processes to ensure TSB investigations are reviewed include the following:

- Occurrence workload management tools guide each investigation through the sequence of reviews.

- Modal Standards and Performance groups have the function of reviewing all investigations, including documentation of analysis.

- The TSB Manual of Investigations, Volume 3, Part 4, "Report Writing Standards", provides guidance for writing and reviewing reports, and includes the requirement at each level of review to verify that documentation is complete.

2.6 Report production processes

The two organizations' methodologies are similar in that the investigator in charge (IIC)/investigation team prepares a draft investigation report, which then undergoes review and approval processes.

As described below, the TSB has formal mechanisms to ensure that there is a response to questions or comments arising from a report review by designated reviewers and the Board—and that the responses themselves are reviewed. The ATSB has no similar mechanisms.

All TSB draft reports are reviewed by the Board before being released for external review, whereas not all ATSB draft reports are reviewed by the Commission in this phase.

Both organizations send a draft report to external parties for comment, although the ATSB distribution is slightly wider than the TSB's. While both agencies prepare responses to comments submitted by reviewers, this response process represents another substantial difference between the ATSB and TSB methodologies.

At the TSB, the IIC coordinates the preparation of a designated-reviewer response package that contains a response to each of the reviewer's comments, as well as a draft of the Board's decision on whether the report should be revised as a result. The response package is reviewed at peer, management, and Board levels to ensure that the response to each representation is appropriate.

Then, in compliance with paragraph 24(4)(d) of the Canadian Transportation Accident Investigation and Safety Board Act, when the final report is released, the TSB sends each designated reviewer a response package containing the responses to their particular submission.

The ATSB process requires the IIC to put the submission comments in a table along with the response to each comment. Unlike the TSB process, the ATSB process does not include a systematic review of the IIC's responses by senior managers or the Commission; nor does the ATSB provide any feedback to submitters on how it responded to their comments.

2.7 External communications processes

The ATSB and TSB have processes in place to communicate safety deficiencies immediately to stakeholders. They also prioritize their safety communications according to risk: the deficiencies with the highest risk are addressed by recommendations, which are their highest level of safety communication.

In addressing safety issues, both agencies prefer to encourage relevant organizations to take safety action proactively rather than to issue formal safety recommendations or safety advisory notices. However, recommendations or safety advisories are issued if safety action has not been taken, or if the safety action taken has not mitigated the risk to the extent the agency believes appropriate.

Both the ATSB and TSB publish recommendations on their respective websites. However, the ATSB also publishes all safety issues identified in ATSB reports in a searchable format. The ATSB tracks and assesses safety action taken to address all significant and critical safety issues, as well as action in response to safety recommendations. In contrast, the TSB formally tracks responses to recommendations, safety advisory letters, and safety information letters, but assesses responses to recommendations only.

2.8 Summary: Comparison of ATSB and TSB methodologies

The ATSB and TSB methodologies are both robust investigative systems focused on identifying and analyzing the sequence of events. They have many similarities because of their common philosophical underpinnings. Both organizations work toward ensuring their methodologies are integrated into day‑to‑day investigative work, which can be challenging.

However, it is the differences between the two approaches that provide opportunities to identify best practices. The following three differences are discussed in more detail later in this report:

- There are differences in the quality-control processes employed by the two organizations.

- The ATSB makes effective use of evidence tables that provide an excellent basis for substantiating or refuting hypotheses/findings and for writing the analysis portion of an investigation report. The TSB does not systematically use evidence tables.

- In the ATSB's risk assessment framework, the criteria used to determine the severity of consequence are more heavily influenced by the aircraft size and type of operation than they are in the approach taken by the TSB.

2.9 Comparison of TSB and ATSB methodologies with ICAO Annex 13

Australia and Canada are both signatories to the International Civil Aviation Organization (ICAO) Convention on International Civil Aviation (Chicago, 1944). The Standards and Recommended Practices for Aircraft Accident Inquiries were first adopted by ICAO on 11 April 1951 pursuant to Article 37 of the Convention, and were designated as Annex 13 to the Convention.

Contracting States are bound to apply all standards outlined in Annex 13, unless a difference has been filed. Australia has filed several differences; most are administrative, and primarily concern the protection and sharing of information in accordance with Australian law. Canada has not filed any differences against Annex 13.

The most recent ICAO safety oversight audit of Australia's civil aviation system, conducted in February 2008, made two findings concerning air accident and incident investigations: the selection of occurrences to be investigated, and the medical examination and toxicological testing of surviving flight crew, passengers, and involved aviation personnel after an accident. Neither of these findings was considered relevant to the TSB Review.

The methodologies and processes used by Australia and Canada are generally considered to meet or exceed the intent and spirit of the Standards and Recommended Practices in Annex 13.

3.0 Review of the Norfolk Island investigation

3.1 Factual information related to the Norfolk Island investigation

On the night of 18 November 2009, an Israel Aircraft Industries Westwind 1124A operated by Pel-Air Aviation Pty Ltd (Pel-Air) was on an aeromedical flight from Apia, Samoa, with a planned refuelling stop at Norfolk Island. The aircraft, unable to land at the aerodrome due to weather and with its fuel about to be exhausted, was ditched in the ocean approximately 3 nm west of Norfolk Island. On board were the captain, first officer, a doctor, a flight nurse, the patient, and one passenger. All were able to evacuate, and were rescued from the water.

The terms of reference and the scope of the TSB Review excluded re-investigating the Norfolk Island ditching; rather, the Review was to focus on how the investigation was conducted.

This section provides an overview of the investigation from the initial notification of the occurrence to the publication of the final report. The information is structured around the main investigation phases set out in the ATSB's Safety Investigation Quality System (SIQS): notification and assessment, data collection (investigation), analysis, and reporting.

3.2 Notification and assessment

The ATSB was notified of the occurrence on the day it happened, 18 November 2009, and the decision to investigate it was made on the following day. A team of four was initially assigned to the investigation. The investigator in charge (IIC) had an aircraft operations background; the other three team members were specialists in engineering, aircraft maintenance, and human factors.

The team did not immediately deploy to the site because the aircraft wreckage was inaccessible and the passengers and crew were returning to Australia. Investigation activities within the first several days included

- issuing protection orders to secure the wreckage and documents;

- interviewing the captain;

- liaising with the Australian Civil Aviation Safety Authority (CASA), the Norfolk Island Police, the Australian Bureau of Meteorology (BoM) for weather information, and Norfolk Island Unicom and Airservices Australia for audio recordings;

- confirming accredited representatives for New Zealand, Fiji, and Israel;

- assessing the need and capability for recovery of the cockpit voice recorder (CVR) and flight data recorder (FDR).

3.3 Data collection (November 2009 to September 2010)

3.3.1 Wreckage examination and recovery

On 29 November 2009, the team deployed to Norfolk Island to search for the wreckage, with the assistance of the Victoria Water Police. The wreckage was located, and the team returned to Australia on 02 December. The ATSB and Victoria Water Police did a video survey of the wreckage from 20 to 22 December.

Considerable research was conducted into the options and associated costs for recovering the flight recorders from the wreckage. By policy, military support was not available unless commercial options did not exist. It was determined that a portable decompression chamber would be required on site for any diving operation because of the depth of the water, which would have driven the cost of recovering the recorders to more than AUD$200 000. On 25 January 2010, the ATSB Chief Commissioner decided that this would not be an efficient or effective use of ATSB resources, given what was known about the circumstances of the ditching and the availability of other sources of data and information.

3.3.2 Witness interviews

The first interviews with the captain and first officer were conducted on 23 November and 02 December respectively. Additional ATSB interviews, telephone conversations, and email exchanges occurred with both the captain and first officer from December 2009 to August 2010. Some telephone contacts included briefings on the progress and direction of the investigation. On 19 January 2010, the pilots were interviewed during a "static reconstruction" of the event in another of the company's Westwind aircraft, with flight information and air traffic control (ATC) recordings from the Norfolk Island occurrence flight available to the crew.

In early December 2009, the Westwind fleet manager was interviewed, and another interview was conducted with the captain. The ATSB did not interview other company personnel during the investigation. The IIC briefed the company management on the issue of crews requesting weather observation updates en route but not requesting forecasts.

Interviews with the other aircraft occupants (the patient and the patient's spouse, the flight doctor and the flight nurse) were held in early December 2009. The IIC and the human factors specialist conducted most of the interviews.

The initial interviews with the flight crew explored the pilots' work schedules as well as sleep achieved during the layover in Apia. However, a comprehensive sleep–wake history going back at least 72 hours and to the last two adequate periods of restorative sleep was not immediately obtained.

3.3.3 Air traffic control recordings and weather information

Throughout December 2009, the ATSB requested and received audio recordings from the Unicom at Norfolk Island and from air navigation service providers in Australia, New Zealand, and Fiji.

From November 2009 to August 2010, as the investigation progressed, the ATSB requested and received several reports of meteorological information from the Australian Bureau of Meteorology. This included detailed wind and temperature information from FL340 to FL390 covering the entire flight path and time, provided on 14 December 2009, and low-altitude wind and temperature information for Norfolk Island from the surface to 800 hPa (approximately 6000 feet above sea level), provided on 31 March 2010.

3.3.4 Information on weather-related decision making

3.3.4.1 Survey of other operators

In January 2010, the IIC asked five operators using small jets on flights over water for the procedures they provided to crews to help them make weather-related decisions on long flights. A review of these operators' standard operating procedures (SOPs) found they did not include specific guidance related to the types of en route decision making that had been needed in the Norfolk Island occurrence. Instead, the operators relied on flight crews' experience and judgement.

3.3.4.2 Survey of students studying for the airline transport pilot licence

In January 2010, the IIC worked with instructors at an airline transport pilot licence (ATPL) training college to conduct an informal survey of ATPL candidates. The survey was intended to gauge the candidates’ understanding of what decisions were required on receipt of an en route update indicating that the weather for the arrival time at destination was forecast to be below alternate minima, but above landing minima. All of the candidates believed a diversion was warranted under these circumstances, but they were less certain about whether a diversion was legally required.

3.3.4.3 Survey of other crews

In September 2010, the IIC conducted an informal survey of pilots concerning weather and decision making on flights where no alternate aerodrome is required and no alternate fuel is carried. The sample for the survey was small and did not include Pel-Air pilots other than the occurrence crew. Participants were given a hypothetical situation involving a decision whether or not to continue to destination. They were asked what information they would gather and when, and were posed questions related to the weather criteria for a diversion. The IIC concluded that pilots did not use a consistent approach to gathering weather information and making decisions in these circumstances.

3.3.5 Release of the preliminary investigation report

On 13 January 2010, the ATSB released a seven-page preliminary investigation report.Footnote 11 The report provided extensive factual information, a discussion of the progress and direction of the investigation, and a statement that the feasibility of recovering the CVR and FDR was being assessed.

3.3.6 Information on regulatory oversight

Throughout the investigation, ATSB staff and management consulted or briefed CASA staff and management. Attachment A of the Memorandum of Understanding between the ATSB and CASA (October 2004) indicated that, upon agreement by both CASA and ATSB, a CASA officer might participate in the ATSB investigation. In this instance, no CASA officer was designated.

There was regular communication over the course of the investigation between the ATSB and CASA to share information and encourage safety action. Most of the briefings, which started right away in November 2009, were given by the ATSB to CASA on the scope and findings of the investigation. The briefings on the safety issue concerning guidance to pilots on en-route weather-related decision making took place in February 2010.

CASA had conducted a special audit of Pel-Air from 26 November to 16 December 2009, after the ditching. The IIC was concerned that reviewing the special audit report might bias the ATSB investigation, and so did not request a copy. The ATSB received a copy of the CASA special audit report in July 2012, during the DIP process.

On 28 July 2010, CASA briefed the ATSB on the findings of its regulatory investigation into the ditching, which it had done in parallel with the ATSB investigation.Footnote 12 The team leader obtained a copy of the CASA investigation report in March 2011.

An internal CASA audit report dated 01 August 2010Footnote 13 critically analyzed CASA's oversight of Pel-Air and its ability to oversee the wider industry. The ATSB had not known about this report during the investigation, and so it was not taken into account during decisions as to the scope of the investigation.

3.3.7 Investigation management in data collection phase

On 14 December 2009, the IIC provided a briefing to ATSB management that included an update on the progress and scope of the investigation, as well as an assessment of possible risks to the investigation. One issue raised at the briefing was the potential impact of the regulator conducting a parallel investigation, which was described in the briefing materials as possible role confusion and pressure to modify the scope of the ATSB investigation. This meeting was likely the source of miscommunication about how this situation would be handled. The IIC understood that the investigation should not cover the same areas as CASA, while ATSB managers believed it was clear that the ATSB investigation was fully independent. This misunderstanding persisted throughout the investigation, and as a result, only two ATSB interviews were conducted with managers and pilots at Pel-Air.

3.4 Analysis phase (February 2010 to October 2010)

By February of 2010, most of the data collection for the investigation was complete. At this point, the IIC was satisfied that a safety issue had been identified, namely the insufficient guidance given to flight crews on obtaining timely weather forecasts en route to help them make decisions when weather conditions at destination were deteriorating. The IIC drafted an analysis of the issue, including a risk assessment that categorized the issue as "critical." Because the evidence tables and risk analysis tools in the Safety Investigation Information Management System (SIIMS)Footnote 14 do not provide a version history, the TSB Review could not assess the use made of them during this period. On 01 February 2010, the team leader and the general manager (GM) decided to provide CASA with a briefing on the perceived safety issue.

The briefing was held by video conference on 03 February 2010. On 12 February, the primary contact at CASA followed up with a phone call to the IIC asking the ATSB to send a letter describing the safety issue.

Around this time, several meetings and discussions on the signing of a new memorandum of understanding (MOU) between CASA and the ATSB were documented. The MOU, which was signed on 09 February 2010, reflected the ATSB approach favouring proactive safety action over recommendations. By taking this approach, the ATSB aimed to track action taken by stakeholders on all issues determined to have a systemic impact (known as safety issues) and to issue recommendations only as a last resort when an unacceptable risk persisted.

CASA was reported to be supportive of this and to be anticipating that the Norfolk Island investigation would generate safety action. At the same time, CASA was clear that it intended to pursue regulatory action against the pilot. This was the subject of several e-mail exchanges between the IIC and the Chief Commissioner on the two agencies' differing perspectives and approaches.

Also in February 2010, an industry stakeholder contacted the GM suggesting that the possibility of fatigue should be considered in the investigation. This correspondence was forwarded to the IIC for consideration and review. The IIC in turn communicated with the human factors investigator about the possible impact of fatigue on the ability of a pilot to assimilate weather information during the flight. No fatigue analysis was prepared at this time.

On 26 February 2010, the ATSB sent a letter to CASA outlining the "critical" safety issue. The issue, based on the preliminary analysis, was described as a lack of regulation or guidance for pilots who, on long flights to destinations with no nearby alternate aerodromes, encounter meteorological conditions that had not previously been forecast.

CASA sent a written response to the ATSB on 26 March 2010, agreeing that the then-current regulations were not prescriptive, but pointing out that weather-related decision making was part of all pilot training syllabi, beginning with the day-VFR (visual flight rules) syllabus. CASA expressed the view that the current published guidance material should allow pilots to make appropriate in-flight decisions, but said it was reviewing the regulations to determine whether amendments were needed.

By the beginning of May, an initial draft of the factual section of the investigation report had been compiled. On 18 May 2010, a critical investigation review was held and an analysis coach was appointed to help prepare the analysis section of the report. The ATSB's analysis framework, as well as its information management tools for recording the analysis, had recently been updated, and in previous investigations, adding a coach to the team had been successful in helping investigators use the tools.Footnote 15

The analysis coach and the IIC worked together for a period of time, much of which was devoted to refining fuel calculations for the occurrence flight. Both the coach and the IIC found the process frustrating. The IIC felt that the coach's focus on the performance of the flight crew prevented the coach from seeing the systemic issues that the IIC considered important. The coach felt that insufficient data had been collected to identify systemic issues.

A progress meeting involving the IIC, the analysis coach, the team leader, and the GM took place on 27 July 2010. On 06 August, the coach, citing an inability to make progress on the analysis due to a lack of supporting data, asked to be, and ultimately was, removed from the investigation.

In the summer of 2010, there was a change in team leader responsible for the investigation: the original team leader was appointed to the position of assistant GM at the beginning of July, but remained involved in the Norfolk Island investigation until the handover to the new team leader was complete in mid-August. It was in the context of this handover that the inability to make progress with the analysis coaching arose. No action was taken to provide further assistance with the analysis.

The IIC continued to develop the analysis and draft report independently. A team meeting involving three of the original team members (the IIC, the human factors specialist, and the engineering specialist) was held on 09 August 2010, during which they reviewed the report findings and agreed on several amendments.

3.5 Report preparation (November 2010 to March 2012)

The IIC submitted the initial draft report to the team leader on 08 November 2010, at a time when the team leader was deployed to a major investigation outside Australia that would continue to draw heavily on ATSB resources into late 2011.

On 12 November, the team leader assigned an investigator to conduct a peer review of the report, and it was completed on 03 December using the SIIMS format for providing peer reviewer comments. It identified concerns with the factual information presented, the safety factors analysis, the findings, and the readability of the report.

Around this time, the team leader assigned another investigator to undertake a second peer review of the report. The IIC was unaware that a second peer review had been requested. He responded to the original reviewer's comments, made changes to the report, and sent these revisions to the team leader on 10 December. On the same day, the second peer reviewer provided comments, in the form of an edited MS Word document, directly to the team leader.

There was no further action on the report until 17 February 2011, when the IIC sent a follow-up e-mail to the team leader. At this point, the IIC was still unaware that a second peer review had been undertaken and was waiting for the team leader's response to the modified report submitted in response to the first peer review.

On 18 February, the team leader forwarded the MS Word document containing the second peer reviewer's comments to the IIC and asked that they be considered.

The IIC made further revisions to the report in response. On 15 March, the IIC and the human factors investigator (the only remaining members of the investigation team) agreed that the report was ready for the team leader's review, and they submitted it the same day.

In March, April, and May of 2011, the IIC received and responded to multiple requests for updates from stakeholders, including the flight crew, the passengers, and the Bureau of Meteorology.

On 02 May, the IIC asked the team leader how to expedite the investigation. On 04 June, the team leader completed the review and returned the report to the IIC for response. The IIC made the requested modifications to the report and on 05 July, after another review by the team leader, the report was sent to the GM for review.

At this time, the IIC prepared and sent to CASA briefing sheets outlining two safety issues raised in the draft report: 1) fuel-management practices for long flights, and 2) Pel-Air crew training and oversight of flight planning for abnormal operations.

In preparation for a follow-up meeting with CASA, the draft report and supporting analysis were reviewed by an acting team leader who raised concerns to the GM about the adequacy of the data and analysis used to support the draft safety issues.

In response, the GM directed a third peer review by two operations (pilot) investigators who had not previously been involved in the investigation. They completed it on 11 August 2011, and provided six pages of comments, suggesting that the organizational issues identified in CASA's investigation report were significant and needed to be developed further in the ATSB report. The IIC reviewed the comments and provided a response to the GM on 05 September 2011.

During this period, the GM was working through a backlog of reports, many of which required considerable revision and editing. The GM was making these revisions himself, and he did the same for the draft Norfolk Island report. It was in the course of this work that the GM concluded that the available data did not substantiate classifying the safety issue on guidance for en-route weather-related decision making as significant, and modified the report to recast it as a minor safety issue. The report, with revisions and comments from the GM, was returned to the IIC, who reworked sections of the report and returned it for another review by the GM.

Internal ATSB communications during 2011 and early 2012 indicated a significant level of frustration with the lack of progress on the report and the extent of revision it required, given the number of reviews it had already undergone.

3.6 Report release (March to August 2012)

3.6.1 First DIP process

On 26 March 2012, the draft report was sent on the authority of the GM to directly involved parties (DIPs)Footnote 16 for comment. Consistent with ATSB policy, the Commission did not review the report before it was released to DIPs.

After several requests for extensions, submissions were received from all DIPs by 16 May 2012, and the DIP response process was started. The IIC responded to DIP submissions using the ATSB tool, and made modifications to the report in response to the comments. The IIC's responses were reviewed by the team leader and the GM in turn, with additional modifications at each stage. On 25 June 2012, the Commission began reviewing the draft investigation report.

As the responses to formal DIP submissions were being drafted, the ATSB received several enquiries from stakeholders about issues that were not in the report. These included the regulatory framework that permitted the occurrence flight to be conducted without an instrument flight rules (IFR) alternate aerodrome; the possibility of flight-crew fatigue; and the issues identified in CASA's special audit of Pel-Air.

On 24 May 2012, in response to an enquiry from the IIC, CASA provided an update on its plans to pursue regulatory changes that would no longer permit international aeromedical flights to be conducted under the category of aerial work, which meant that such flights would be required to identify an alternate aerodrome in the flight plan and carry alternate fuel.

Due to the significant revisions to the report, including a finding related to Pel‑Air's oversight of its operations that was added after the ATSB received the CASA special audit report, a second DIP review was started to give affected stakeholders (the captain, CASA, and Pel-Air) an opportunity to make additional comments.

3.6.2 Second DIP process

The revised draft report was released to DIPs on 16 July 2012, and submissions were received by 23 July. The IIC prepared the responses, which the team leader and the GM then reviewed. When, on 30 July 2012, the GM sent the revised draft report to the Commission for review, he remarked that the report was likely to result in significant media interest, and recommended that a media strategy be adopted.

In reviewing the report, the Commissioners expressed concern that there was insufficient factual information and analysis in the report to support the revised finding on the company's oversight of its operations. The Commission also questioned why the CASA special audit of Pel-Air had not been relied upon more extensively to support the findings.

These comments by the Commission did not result in changes to the report.

Ultimately, the finding related to Pel-Air's oversight of its operations was removed from the report, and on 17 August 2012, the report was approved for public release.

3.6.3 Public release and correction of factual errors

The report was released to the public on 30 August 2012. The following day, the ATSB was advised that the report contained a factual error with respect to the 0630Z METAR weather observation the pilot received. A correction was made to the report on the ATSB website, but the change was also incorrect. When it was advised of this second error, the ATSB corrected the METAR information again, and provided extra details to ensure readers understood that incorrect information had been transmitted to the crew with respect to the 0630Z METAR.

3.7 Analysis related to Norfolk Island investigation

3.7.1 Adequacy of Norfolk Island report

The function of the ATSB is to "improve safety and public confidence in the aviation, marine and rail modes of transport",Footnote 17 in part through the independent investigation of occurrences. The response to the Norfolk Island investigation report clearly shows that the investigation report published by the ATSB did not adequately address issues that the Australian aviation industry and members of the public expected to have been addressed, including

- the regulatory framework that allowed this flight to be conducted as aerial work without an IFR alternate aerodrome;

- the extent to which the flight planning and fuel management deficiencies observed in this occurrence extended throughout the company;

- the adequacy of CASA oversight of Pel-Air;

- the potential for flight crew fatigue and the adequacy of fatigue-management measures in place at Pel-Air;

- issues surrounding egress and the survivability of crew and passengers.

This section of the analysis will focus on how the investigation report came to be wanting in these areas. Each element of the terms of reference for the TSB Review will be addressed: application of the investigation methodology; management and governance of the investigation; investigation report processes; and external communication.

3.7.2 Application of the investigation methodology

3.7.2.1 Data collection issues

The timely collection of occurrence data is critical to a thorough analysis and an efficient investigation. Although changes in scope or new avenues of inquiry frequently make it necessary to collect additional data as an investigation progresses, the perishable nature of some data or time constraints on the investigation may make this impossible.

In the Norfolk Island investigation, the analysis of specific safety issues, including fatigue, fuel management, and company and regulatory oversight, was hampered because insufficient data had been collected, as indicated in the following sections.

3.7.2.1.1 Fatigue

Analysis of human fatigue in transportation occurrences requires sufficient data to demonstrate whether a state of sleep‑related fatigue existed, and whether it played a role in the human behaviour observed in the occurrence. The former requires a comprehensive history of the quantity and quality of sleep going back at least 72 hours, preferably to the last two periods of restorative sleep. The latter requires the human performance observed to be compared with the known effects of fatigue on performance. An understanding of the level of risk associated with fatigue in an operation also requires information about the countermeasures in place.

In the Norfolk Island investigation, some sleep and rest data were obtained in initial crew interviews, but detailed information was not obtained for the period before the occurrence crew was paired. In analyzing the potential for sleep-related human fatigue, it is the quantity and quality of sleep obtained that is of critical interest, and it is problematic to assume that an individual who is not working is well rested. Further, efforts to conduct a fatigue analysis were hampered by the fact that, during different interviews, the flight crew gave different accounts of the amount of sleep obtained on the layover.

The ATSB Safety Investigation Quality System (SIQS) included a checklist intended to provide guidance for investigators when interviewing individuals who will be DIPs. The use of the checklist was not mandatory, and the introduction to the checklist reminded investigators that a checklist might not cover all the information that should be collected in such circumstances. The checklist for obtaining sufficient history for a fatigue analysis included the following points:

Fatigue/alertness

- normal sleep pattern – any changes in last week

- normal sleep quality (difficulties falling, staying asleep)

- last sleep – start, finish, quality, any disturbances

- 2nd last sleep – start, finish, quality, any disturbances

- naps in last 48 hours

- factors affecting sleep quality – medical, personal, environmental

Recent activities

- describe work schedule last 3 days, anything unusual

- describe how feeling at time

- describe workload at time (physical, mental, time demands)

- describe non-work activities on day; night before

- recent exercise, exertions

- what meals/food on day – when

- what drank on day, when (coffee, tea, water)Footnote 18

It is not known whether the checklists were used in the crew interviews.

An analysis of fatigue data was not attempted until late in the investigation. By then, it was too late to address shortcomings in the available data. The report said that the flight crew were likely to have been fatigued on their arrival in Apia on the outbound flight due to prolonged wakefulness, but concluded that there were insufficient data to determine whether the crew were fatigued at the time of the occurrence or whether fatigue played a role in the crew's understanding of weather information received en route. The adequacy of the operator's fatigue-management measures was not addressed in the report.

3.7.2.1.2 Fuel management

The investigation examined fuel planning and in-flight decision making. Although the flight was not required to carry fuel for an alternate aerodrome, it was required to carry fuel for contingency operations. On page 39, the report states, "the flight crew departed Apia with less fuel than required to safely complete the flight in case of one engine inoperative or depressurised operations from the least favourable position during the flight."Footnote 19 However, the report does not specify how much fuel would have been required for these contingencies.

The meteorological information collected would have supported calculation of expected aircraft cruise and arrival fuel performance for comparison with the occurrence flight. However, there was little information on low- and mid-level winds, which prevented investigators from calculating expected fuel performance for one-engine-inoperative or depressurized operations. Being able to calculate the occurrence flight's fuel requirements for contingency operations would have helped investigators better understand the occurrence crew's in-flight decision making.

The IIC's perspective was that he should not duplicate work being done by CASA, including interviews with other Pel-Air pilots. Consequently, the interviews did not take place, and the investigation could draw no conclusions as to whether the fuel planning and management practices on the occurrence flight were used by other Pel-Air pilots.

Three strategies had been employed to gather data to determine whether flight planning and fuel-management deficiencies observed in the occurrence were widespread. The first consisted of a sample of operators of light jets over water, who were asked to provide copies of the procedures provided to help crews make weather-related decisions on long flights. The second was a consultation with a pilot training college that provided information on ATPL candidates' understanding of the need to divert if weather was forecast to be below alternate minima at the destination aerodrome. The third consisted of responses from a sample of pilots who were given a hypothetical flight situation and asked what weather information they would obtain, when they would ask for it, and the criteria for diverting to an alternate aerodrome.

The survey of operators, while not helpful in determining how widespread the practices observed in the occurrence were within Pel-Air, did provide a baseline against which to compare Pel-Air's procedures in this area, and was relied upon extensively in communicating the safety issue to CASA in February 2010.

It is not clear whether the survey of ATPL candidates was derived from a deficiency identified in the investigation of the Norfolk Island occurrence. In any event, because of the small size of the sample and the hypothetical nature of the questions posed in the survey, the data obtained did not effectively support the argument that a systemic deficiency existed.

3.7.2.1.3 Company and regulatory oversight

In this investigation, very little of the data collected documented the actions taken by the regulator to oversee Pel-Air's operations or the actions taken by Pel-Air itself to oversee them. Data collection at Pel-Air consisted of interviews with the occurrence crew and the Westwind fleet manager, and a review of documents Pel-Air had provided. Investigators did not interview additional Pel-Air crews to determine the extent to which the flight planning and fuel monitoring deficiencies observed in the occurrence existed throughout the company, and only one management interview was conducted over the course of the investigation.

Similarly, no interviews were held with CASA operations inspectors who were familiar with the operation and oversight of Pel-Air, and several key documents, including the CASA special audit of Pel-Air, were not obtained until very late in the investigation.

The lack of data in these areas was felt throughout the investigation. Two examples of this are the removal of a finding with respect to Pel-Air's oversight of its aeromedical operations,Footnote 20 and the lack of any analysis of CASA's oversight of Pel-Air.

The reasons for inadequate data collection in these areas will be discussed below in the section on governance of the investigation.

3.7.2.2 Application of analysis tools

The ATSB Safety Investigation Guidelines Manual notes that analysis provides the link between collected data and investigation findings. It identifies four keys to an effective analysis, namely the use of well-defined concepts; a structured approach or process; a team-based approach; and knowledge of the domain being investigated.Footnote 21

The SIQS laid out well-defined and accepted concepts as well as a structured approach for investigations. It included events diagrams, all-factor maps based on the elements of James Reason's model of accident causation, and evidence tables that provided tests for the existence and influence of potential safety factors. As noted elsewhere in this report, the ATSB analysis tools, when used effectively, provided a structure for organizing the arguments to validate safety factors and a useful framework for encouraging a team approach to analysis.

The guidelines on analysis provided to ATSB investigators emphasized that analysis was an iterative process that might trigger additional data collection:

Analysis is an iterative process, interacting with the other major tasks involved in an investigation. Analysis may lead to a requirement to collect further data, which then needs to be analysed. During report preparation, we may identify a need to conduct further analysis, which may change the content of the final report.Footnote 22

Shortly before the Norfolk Island occurrence, the ATSB had integrated its suite of analysis tools into its information management system. This was part of an effort to improve the consistency with which the tools were used in investigations, and to address the considerable variability observed in the way investigators and managers used them.

There were a number of challenges to overcome to achieve this, including

- a low level of comfort with the investigation tools among some investigators who were required to use them infrequently;

- the perception by some investigators that the analysis tools were an unnecessary administrative burden; and

- the tendency of some investigators to conduct analysis on their own rather than employ a team to test their thinking.

Some of these challenges were observed during the review of the Norfolk Island investigation.

- The "critical" safety issue that the IIC had identified (the insufficient guidance given to flight crews on obtaining timely weather forecasts en route to help them make decisions when weather conditions at destination were deteriorating) was communicated to CASA in February 2010, but

  - the issues with data collection described above meant that the information available to support the identification of a critical safety issue was weak;

  - the description of the safety issue had been prepared by the IIC in isolation and recorded in MS Word documents and e-mails rather than in SIIMS;

  - the underlying analysis and supporting information had not been reviewed before the safety issue was communicated to CASA;

  - the description of the safety issue was reviewed as text, not against the ATSB's analysis tools, which meant that weaknesses in the material supporting the safety issue's definition as critical were not detected; and

  - an opportunity to emphasize the importance of using the tools early in the investigation was missed.

- The analysis coaching provided during the Norfolk Island investigation was intended to help ease the transition to, and improve the use of, the analysis tools. Attempts were made to create a team approach, and to structure the analysis outward from the occurrence events. However, the team became bogged down by differences in perspectives on the validity of the data available to support the safety issues that had been identified previously. Consequently, the coaching broke down and the data quality issues were not resolved.

- Multiple peer reviews of the report identified weaknesses in the data supporting the safety issue described in it and questioned the validity of the analysis. These concerns were shared by the GM. The GM worked with the IIC to refine the draft safety issues, but the approach taken was to revise the draft report rather than to perfect the analysis using the analysis tools and then modify the draft report based on the agreed-upon analysis.

The above examples indicate that the analysis tools were not effectively used in this investigation to

- systematically work outward from the occurrence events to underlying organizational factors;

- identify areas in which additional data collection was necessary;

- structure a team-based approach to analysis; or

- review safety issues or the draft report.

Because of weaknesses in the application of the ATSB analysis framework, data insufficiencies were not addressed and potential systemic oversight issues were not analyzed.

3.7.2.2.1 Lack of a tool for conducting fatigue analysis

The ATSB has a number of excellent tools to provide investigators with a structure for analyzing safety factors and issues, but it does not use a specific tool to guide data collection and analysis in the area of human fatigue.

The TSB has developed a guide for investigators on this topic. While the suggested approach to analyzing fatigue uses the same tests for existence and influence as in the ATSB's analysis frameworks (i.e., one test to demonstrate that the condition existed and a second to demonstrate that it played a role in the occurrence), the TSB guide adds value by providing specific science‑based criteria for use in making fatigue-related determinations.

The guide also supports data collection in the area of sleep-related fatigue by providing a tool for capturing a good sleep-wake history and by providing background information so that investigators understand the nature of fatigue and why the data are important.
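The two-test structure shared by the ATSB analysis frameworks and the TSB guide can be expressed as a small decision procedure. This Python sketch is a hypothetical illustration, not either agency's tool; the three `Result` values and the returned labels are assumptions. It shows why a conclusion such as the one the Norfolk Island report reached on fatigue follows when the existence test cannot be completed: the influence test is never reached, and the only defensible outcome is "undetermined".

```python
from dataclasses import dataclass
from enum import Enum


class Result(Enum):
    """Outcome of one test (hypothetical three-valued scheme)."""
    SUPPORTED = "supported"
    NOT_SUPPORTED = "not supported"
    INSUFFICIENT_DATA = "insufficient data"


@dataclass
class CandidateFactor:
    name: str
    existence: Result  # Test 1: did the condition exist?
    influence: Result  # Test 2: did it play a role in the occurrence?


def evaluate(factor: CandidateFactor) -> str:
    """Apply the existence test first; influence is considered only if the condition existed."""
    if factor.existence is Result.NOT_SUPPORTED:
        return "not a safety factor"
    if factor.existence is Result.INSUFFICIENT_DATA:
        return "undetermined: more data needed on existence"
    if factor.influence is Result.SUPPORTED:
        return "safety factor"
    if factor.influence is Result.NOT_SUPPORTED:
        return "existed, but not contributory"
    return "undetermined: more data needed on influence"


# Illustrative only: a sleep history too incomplete to establish fatigue.
print(evaluate(CandidateFactor("fatigue", Result.INSUFFICIENT_DATA, Result.INSUFFICIENT_DATA)))
# undetermined: more data needed on existence
```

Ordering the tests this way makes the data requirement explicit: an incomplete sleep-wake history stops the analysis at the first test, regardless of what the observed performance suggests.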

3.7.2.2.2 Risk analysis

The ATSB Safety Investigation Policy and Procedures Manual states that "a risk analysis will be conducted prior to initiating any ATSB safety recommendation or safety advisory notice."Footnote 23 The purpose of the risk assessment is to determine whether the safety issue carries a risk level that warrants corrective action by another organization.Footnote 24

Understanding, and being able to describe, the risk associated with a safety issue is a crucial step to being able to make a compelling argument for change. If an investigative body is to convince stakeholders that action is required, it must be able to demonstrate that the consequences of maintaining the status quo are sufficiently costly to warrant devoting resources to change it. A risk analysis provides the grounds for just this argument. As the Norfolk Island investigation shows, however, these arguments can be undermined if there is too much focus on the labels associated with a risk assessment.

A safety issue labelled "critical" is associated with an intolerable level of risk and is immediately communicated to a relevant organization. If the consequent safety action is insufficient to reduce the risk level to below critical, then a safety recommendation may be issued as soon as possible.Footnote 25 In contrast, "significant" and "minor" safety issues are associated with lower levels of risk, and other vehicles—such as recommendations made on release of the final report, or safety advisory notices—will be used to communicate them with less urgency.

The safety issue communicated to CASA in February 2010 related to the insufficient guidance given to flight crews on obtaining timely weather forecasts en route to help them make decisions when weather conditions at destination were deteriorating. When the safety issue was presented to CASA, it was categorized as "critical", but in the final report it was described as "minor", which caused significant concern among stakeholders.

The risk label applied to a safety issue is an output of the ATSB's risk analysis process, which involves the following steps:

- Describe the worst possible scenario.

- Review existing risk controls.

- Describe the worst credible scenario.

- Determine the consequences associated with the worst credible scenario.

- Determine the likelihood of the worst credible scenario.

- Estimate the level of risk (using consequences and likelihood).

Using the worst credible scenario is important because it accounts for the risk controls that are already in place to defend the system. A risk assessment based on the unlikely failure of all the defences in place may result in an argument for change that is alarmist and unconvincing.
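The final step, estimating the level of risk from consequence and likelihood, is commonly done with a risk matrix. The Python sketch below uses hypothetical four-point ordinal scales and hypothetical thresholds on the product of the two scores; it is not the ATSB's matrix, whose categories and boundaries are not reproduced in this report. It illustrates only that the resulting label depends on which scenario is scored, which is why the process scores the worst credible scenario rather than the worst possible one.

```python
from dataclasses import dataclass
from enum import IntEnum


class Consequence(IntEnum):
    """Hypothetical ordinal consequence scale (not the ATSB's)."""
    MINOR = 1
    MODERATE = 2
    MAJOR = 3
    CATASTROPHIC = 4


class Likelihood(IntEnum):
    """Hypothetical ordinal likelihood scale (not the ATSB's)."""
    RARE = 1
    UNLIKELY = 2
    POSSIBLE = 3
    LIKELY = 4


@dataclass
class Scenario:
    description: str
    consequence: Consequence
    likelihood: Likelihood


def risk_label(scenario: Scenario) -> str:
    """Map a scenario to a risk label using hypothetical score thresholds."""
    score = int(scenario.consequence) * int(scenario.likelihood)
    if score >= 12:
        return "critical"
    if score >= 6:
        return "significant"
    return "minor"


# Invented values: the same hazard scored under two assumptions.
worst_possible = Scenario("all defences assumed to fail",
                          Consequence.CATASTROPHIC, Likelihood.POSSIBLE)
worst_credible = Scenario("existing risk controls taken into account",
                          Consequence.MAJOR, Likelihood.UNLIKELY)

print(risk_label(worst_possible))  # critical (4 * 3 = 12)
print(risk_label(worst_credible))  # significant (3 * 2 = 6)
```

In this sketch, the label changes from "critical" to "significant" solely because the existing controls are taken into account, which is the step that distinguishes a credible argument for change from an alarmist one.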